October 30, 2023 | UW News

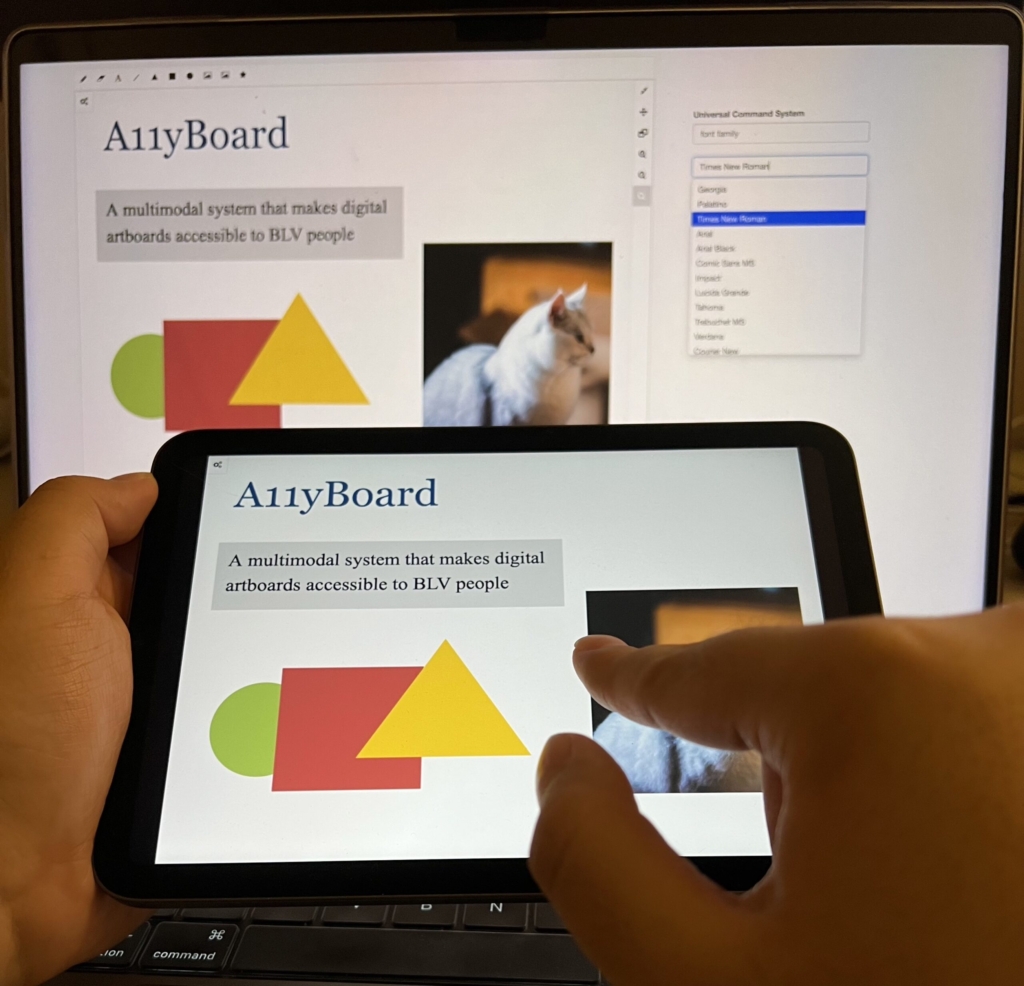

A team led by CREATE researchers has created A11yBoard for Google Slides, a browser extension and phone or tablet app that allows blind users to navigate through complex slide layouts, objects, images, and text. Here, a user demonstrates the touchscreen interface. Team members Zhuohao (Jerry) Zhang, Jacob O. Wobbrock, and Gene S-H Kim presented the research at ASSETS 2023.

Screen readers, which convert digital text to audio, can make computers more accessible to many disabled users — including those who are blind, low vision or dyslexic. Yet slideshow software, such as Microsoft PowerPoint and Google Slides, isn’t designed to make screen reader output coherent. Such programs typically rely on Z-order — which follows the way objects are layered on a slide — when a screen reader navigates through the contents. Since the Z-order doesn’t adequately convey how a slide is laid out in two-dimensional space, slideshow software can be inaccessible to people with disabilities.
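To make the Z-order problem concrete, here is a minimal Python sketch. It is an illustration only, not A11yBoard's code or the way any slideshow program stores its objects. The `SlideObject` class, its fields and the sample coordinates are all hypothetical. It compares the order in which a screen reader would announce objects if it followed layering with a simple top-to-bottom, left-to-right reading of the same slide.

```python
from dataclasses import dataclass


@dataclass
class SlideObject:
    """Hypothetical slide object, invented for this illustration."""
    name: str
    z: int      # layering position: higher values are drawn on top
    x: float    # left edge, in arbitrary slide units
    y: float    # top edge, in arbitrary slide units


def z_order(objects):
    """Names in layering order, bottom layer first."""
    return [o.name for o in sorted(objects, key=lambda o: o.z)]


def spatial_order(objects):
    """Names ordered top-to-bottom, then left-to-right."""
    return [o.name for o in sorted(objects, key=lambda o: (o.y, o.x))]


# A made-up slide: the photo was added first, the title and body text later.
slide = [
    SlideObject("photo", z=0, x=400, y=150),
    SlideObject("caption", z=1, x=400, y=420),
    SlideObject("title", z=2, x=40, y=20),
    SlideObject("body text", z=3, x=40, y=150),
]

print(z_order(slide))        # ['photo', 'caption', 'title', 'body text']
print(spatial_order(slide))  # ['title', 'body text', 'photo', 'caption']
```

In this made-up slide, the layering order announces the photo and its caption before the title, even though the title sits at the top of the slide. A sorted list is only one way to reflect layout; A11yBoard itself lets users explore positions directly through touch, audio and search.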

Combining a desktop computer with a mobile device, A11yBoard lets users work with audio, touch, gesture, speech recognition and search to understand where different objects are located on a slide and move these objects around to create rich layouts. For instance, a user can touch a textbox on the screen, and the screen reader will describe its color and position. Then, using a voice command, the user can shrink that textbox and left-align it with the slide’s title.

“For a long time and even now, accessibility has often been thought of as, ‘We’re doing a good job if we enable blind folks to use modern products.’ Absolutely, that’s a priority,” said senior author Jacob O. Wobbrock, a UW professor in the Information School. “But that is only half of our aim, because that’s only letting blind folks use what others create. We want to empower people to create their own content, beyond a PowerPoint slide that’s just a title and a text box.”

A11yBoard for Google Slides builds on a line of research in Wobbrock’s lab exploring how blind users interact with “artboards” — digital canvases on which users work with objects such as textboxes, shapes, images and diagrams. Slideshow software relies on a series of these artboards. When lead author Zhuohao (Jerry) Zhang, a UW doctoral student in the iSchool, joined Wobbrock’s lab, the two sought a solution to the accessibility flaws in creativity tools, like slideshow software. Drawing on earlier research from Wobbrock’s lab on the problems blind people have using artboards, Wobbrock and Zhang presented a prototype of A11yBoard in April. They then worked to create a solution that’s deployable through existing software, settling on a Google Slides extension.

For the current paper, the researchers worked with co-author Gene S-H Kim, an undergraduate at Stanford University, who is blind, to improve the interface. The team tested it with two other blind users, having them recreate slides. The testers both noted that A11yBoard greatly improved their ability to understand visual content and to create slides themselves without constant back-and-forth iterations with collaborators; they needed to involve a sighted assistant only at the end of the process.

The testers also highlighted spots for improvement: Remaining continuously aware of objects’ positions while trying to edit them still presented a challenge, and users were forced to perform repeated actions one at a time, such as aligning several visual groups from left to right, instead of completing them in batches. Because of how Google Slides functions, the app’s current version also does not allow users to undo or redo edits across different devices.

Ultimately, the researchers plan to release the app to the public. But first they plan to integrate a large language model, such as GPT, into the program.

“That will potentially help blind people author slides more efficiently, using natural language commands like, ‘Align these five boxes using their left edge,’” Zhang said. “Even as an accessibility researcher, I’m always amazed at how inaccessible these commonplace tools can be. So with A11yBoard we’ve set out to change that.”

This research was funded in part by the University of Washington’s Center for Research and Education on Accessible Technology and Experiences (UW CREATE). For more information, contact Zhang at zhuohao@uw.edu and Wobbrock at wobbrock@uw.edu.

This article was adapted from the UW News article by Stefan Milne.