One of the most pressing and important changes in computing has been the advent of practically useful and widely available AI, which is impacting almost every aspect and application of computing.

If we do not immediately and thoughtfully explore AI’s implications for people with disabilities, we risk leaving them behind in the use of AI and the accessibility of its products, and subjecting them to AI biases. At the same time, as CREATE Director Jennifer Mankoff and Ph.D. student Kate Glazko noted in the journal Nature, AI has enormous potential to support disabled needs and ways of being.

CREATE’s recent ethnographic work has demonstrated that today’s newest AI tools, generative AI, can potentially provide a number of accessibility advantages, while also presenting challenges such as verifiability and ableism. However, whether or not these problems have been solved, people with disabilities are actively and frequently using AI on a daily basis now. We must understand the impact and the implications of this.

CREATE awarded $4.6M for research on AI risks, opportunities for people with disabilities

September 10, 2024 We are excited to announce that CREATE has been awarded a five-year, $4.6 million grant to advance crucial research on artificial intelligence (AI) and generative artificial intelligence (GAI). Research and development projects will explore questions about recent developments in AI and GAI: What risks do they pose for people with disabilities? And…

Here are some of the ways CREATE is exploring the impact of AI on people with disabilities:

Ableism and AI

We must develop inclusive datasets, measure AI biases, and teach community and industry partners about the risks and potential for AI to impact disability.

CREATE’s investigation into AI ableism is still in its early stages.

- We explore the topic in our recent paper, An Autoethnographic Case Study of Generative Artificial Intelligence’s Utility for Accessibility, and are building on that to explore bias in specific contexts.

- We published a paper at the 2024 ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT), Identifying and Improving Disability Bias in GPT-Based Resume Screening. It documented significant bias against jobseekers whose resumes mention their disability, even in the context of a leadership award or scholarship, compared with the same resumes with one fewer award or scholarship.

- We have also written about AI risks in recent policy documents, such as our comments on the Federal RFI regarding Biometrics.

Autoethnographic study of generative AI for accessibility

Generative artificial intelligence tools like ChatGPT and Midjourney can potentially assist people with various disabilities. They could summarize content, compose messages, or describe images. Yet, when seven CREATE researchers tested AI tools’ utility for accessibility, they found that the tools regularly spout inaccuracies and fail at basic reasoning, perpetuating ableist biases.

AI for communication

ASL recognition

Most existing information resources (like search engines, news sites, or social media) are written or spoken, and do not offer equitable access for the 70 million deaf people worldwide who primarily use a sign language and for whom a written language like English is not a native language.

- CREATE faculty member Richard Ladner and a team that includes his former student Danielle Bragg and current CREATE Ph.D. student Aashaka Desai are working with Microsoft to design ASL recognition datasets for sign language development, which has profound implications around issues of fairness, ethics, and responsible AI development.

- Desai also led a team of DHH scholars in highlighting systematic biases in AI sign language research.

Non-speech audio recognition for hard of hearing people

CREATE researchers, including Dhruv Jain, Ph.D. ’22, with advisors Leah Findlater and Jon E. Froehlich, are examining how deaf and hard of hearing (DHH) people think about and relate to sounds in the home, from mundane beeps and whirs to dog barks and children’s shouts. They are also soliciting feedback and reactions to initial domestic sound awareness systems and exploring potential concerns.

With input from DHH participants on their perceptions of and experiences with sound in the home and their feedback on initial sound awareness mockups, the team designed and evaluated three tablet-based sound awareness prototypes. Key findings suggest a general interest in smarthome-based sound awareness systems alongside significant concerns related to privacy, activity tracking, cognitive overload, and trust.

SoundWatch

The team also introduced SoundWatch, an Android app that provides glanceable, always-available sound feedback on smartwatches for d/Deaf and hard-of-hearing users. Created by a diverse team at the UW and led by a hard-of-hearing innovator who wanted to build for his community, SoundWatch uses machine learning to alert its users to important sounds occurring in their environment.

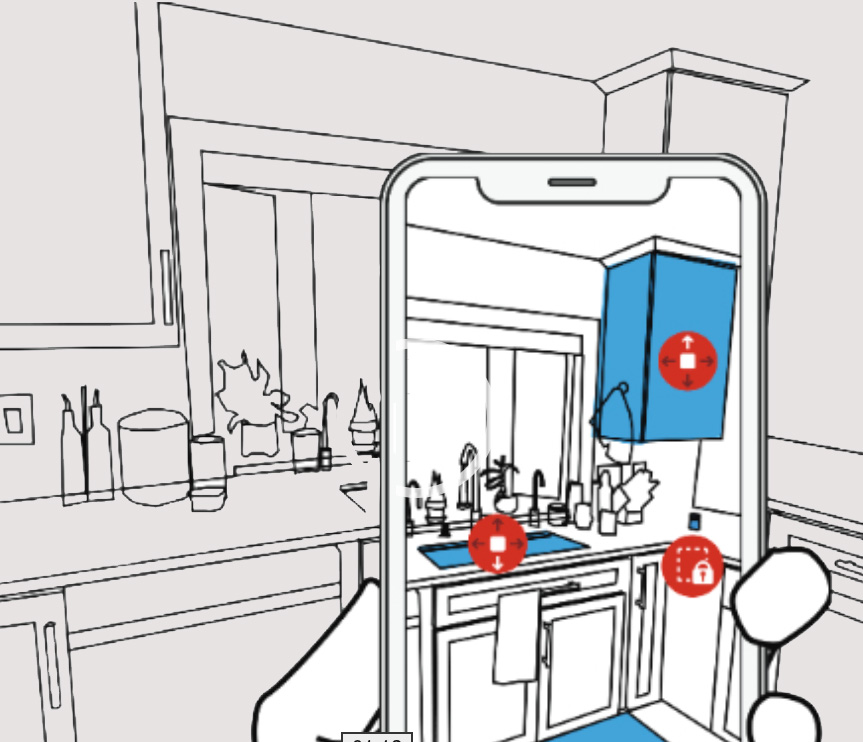

Augmented reality captioning

Leah Findlater and Jon E. Froehlich are also working with Raja Kushalnagar to design, build, and evaluate new augmented reality (AR) approaches to real-time captioning for people who are deaf and hard of hearing. In contrast to traditional captioning, which uses an external, fixed display (e.g., laptop or large screen), our approach allows users to manipulate the shape, number and placement of captions in 3D space.

Preliminary findings suggest that, compared to traditional laptop-based captions, captioning on a head-mounted display (HMD) may increase glanceability, improve visual contact with speakers, and support access to other visual information (e.g., slides).

AI support for speech and communication therapy

CREATE faculty member Julie Kientz, chair of the UW Human Centered Design & Engineering department, leads formative research and co-design activities around the opportunities and challenges of AI support for speech-language pathologists who work with children with speech and language difficulties.

Jod: Videoconferencing Platform for Mixed Hearing Groups

Informed by prior work examining accessibility barriers in current videoconferencing platforms, lead author Anant Mittal, with advisor and CREATE associate director James Fogarty as a co-author, designed and developed Jod, a videoconferencing platform to facilitate communication in mixed hearing groups. Using Jod, the team conducted mixed-hearing group sessions. Their paper includes findings, insights for future videoconferencing designs, and recommendations for conducting mixed-hearing studies.

AI for health and activities of daily living

ARTennis

Jaewook Lee and Jon E. Froehlich seek to broaden participation in team sports for people with low vision. ARTennis is their prototype for wearable augmented reality (AR) that uses real-time computer vision (CV) to enhance the visual saliency of tennis balls.

Hand function assessment interface

CREATE associate directors Katherine M. Steele and Heather Feldner, postdoctoral researcher Sasha Portnova, along with UW Rehabilitation Medicine professor Chet Moritz and his students Adria Robert-Gonzalez and Emily Boeschoten, are co-designing a video-based interface for hand function assessment in individuals with motor control impairments using AI techniques.

AI for physical spaces and transportation

CREATE associate director Jon E. Froehlich leads a suite of research projects that use AI to design, build, and evaluate interactive technology that addresses high-value social issues such as environmental sustainability, computer accessibility, and personalized health and wellness.

- Room Accessibility and Safety Scanning in Augmented Reality (RASSAR)

RASSAR, with CREATE Ph.D. student Xia Su as student lead, is a proof-of-concept prototype that uses LiDAR and camera data, machine learning, and augmented reality (AR) to semi-automatically identify, categorize, and localize indoor accessibility and safety issues. These assessment instruments—bolstered by advances in computer vision, AR, and mobile sensors—enable homeowners and trained experts to audit and improve home safety.

- BusStopCV uses real-time computer vision in the web browser to collect data on accessibility-related features at bus stops such as the presence of benches, shelters, and accessible landing features.

- Project Sidewalk combines computer vision with human crowdsourcing to scalably collect data on sidewalk accessibility.

- Robotic Accessibility Indoor Scanner (RAIS) is a semi-autonomous robot that uses LiDAR and computer vision to create 3D reconstructions of indoor space and detect inaccessible corridors, furniture, countertops, and more.

AI for digital accessibility & visualization

VoxLens for accessible visualizations

VoxLens is a JavaScript plugin that allows people to interact with visualizations. Working with screen-reader users, CREATE graduate student Ather Sharif and CREATE associate director Jacob O. Wobbrock have shown that VoxLens users completed specific tasks with 122% increased accuracy and 36% decreased interaction time compared to participants who did not have access to the tool. To implement VoxLens, visualization designers add just one line of code.

CREATE researchers are using large language models (LLMs) to support Blind/Visually Impaired (BVI) users’ control over their visualization experience. Two projects are in very early stages but exciting nonetheless: CREATE Ph.D. candidate Venkatesh Potluri is working on data science visualization, and AccessComputing team member Brianna Wimer is working on diagram understanding.

AI for interaction support

Accessible biosignal gesture interface prototyping

Former CREATE postdoctoral student Momona Yamagami, CREATE Director Jennifer Mankoff and associate director Jacob O. Wobbrock are developing new algorithms to support accessible biosignal gesture interface prototyping for non-expert developers.

Visual privacy algorithms and tools

Along with collaborators at CU Boulder and UIUC, CREATE associate director Leah Findlater is working on a project to help blind and low vision people safeguard private visual content.

Evaluating the accessibility of a hand-tracking device for device interactions

CREATE Ph.D. students Sasha Portnova (current) and Momona Yamagami (graduated in 2023) have evaluated the accessibility of a hand-tracking device for device interactions by individuals with upper-body disabilities. They used AI techniques to assess the accuracy of the Leap Motion Controller. PIs: Jennifer Mankoff, Katherine M. Steele, Heather Feldner.

RECENT NEWS

Articles on AI and machine learning research at CREATE.

Blind and Low Vision Teens Join CREATE through YES2 Summer Internships

September 3, 2025 This summer, CREATE hosted three high school/undergraduate interns through a program that focuses on career preparation for young Washingtonians who are blind or visually disabled. Left to right: YES2 intern Susanna Haley, DUB Research Experience for Undergraduates (REU) student Kaija Frierson, YES2 intern Mohammed Al-jawadi, YES2 intern Kaleb The Washington Department of…

James Fogarty inducted into SIGCHI Academy

May 13, 2025 CREATE associate director James Fogarty has been inducted into the SIGCHI Academy Class of 2025. Each class of the ACM Special Interest Group on Computer-Human Interaction represents the principal leaders of the field, whose research has helped shape how we think of HCI. A central figure in Seattle’s human-computer interaction (HCI) community…

Not just a wheelchair: Disability representation in AI

November 19, 2024 CREATE Ph.D. graduate Kelly Avery Mack led research that investigated how AI represented people with disabilities. Specifically, Mack's team wanted to know if AI-produced images and image descriptions perpetuated bias or showed positive portrayals of disability. The team included four research scientists from Google Research: Rida Qadri, Remi Denton, new CREATE Advisory…

CREATE researchers find ChatGPT biased against resumes that imply disability, models improvement

June 24, 2024 Generative Artificial Intelligence (GAI) tools can be useful and help people meet currently unaddressed access needs, but we need to acknowledge that risks such as bias exist, and be proactive as a field in finding accessible ways to validate GAI outputs. This is the takeaway from CREATE Ph.D. student Kate Glazko, who…