Just about everybody in business, education, and artistic settings needs to use presentation and design software like Microsoft PowerPoint, Google Slides, and Adobe Illustrator. These tools use artboards to hold objects such as text, shapes, images, and diagrams. But for blind and low-vision (BLV) people, using such software adds a new level of challenge beyond keeping bullet points short and images meaningful. They experience:

- High added cognitive load

- Difficulty determining relationships between objects

- Uncertainty about whether an operation has succeeded

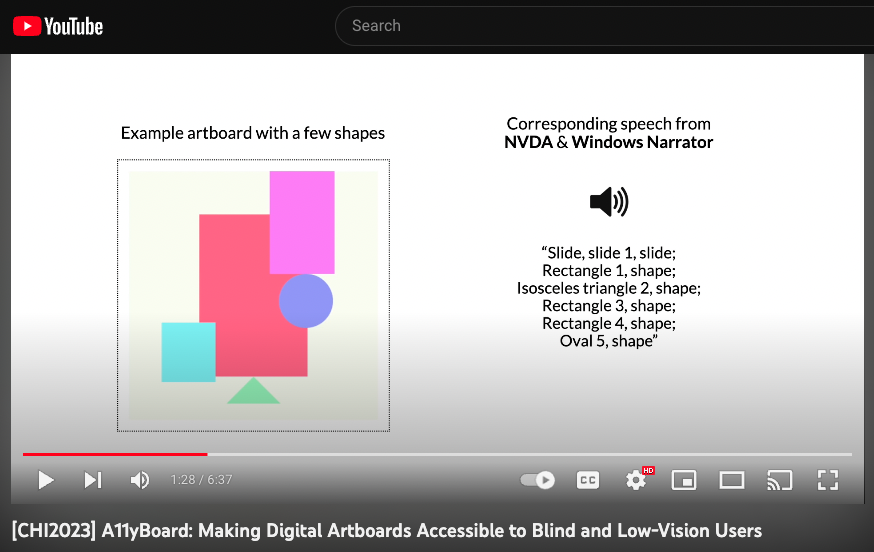

Screen readers, which were built for 1-D text information, don’t handle 2-D information spaces like artboards well.

For example, NVDA and Windows Narrator report artboard objects only in their Z-order, regardless of where those objects are located or whether they visually overlap, and report only each object’s shape name without any other useful information.
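To make the mismatch concrete, here is a minimal Python sketch contrasting Z-order reading with a spatial (top-to-bottom, left-to-right) order. The `ArtboardObject` type, its fields, and the sample slide are hypothetical illustrations, not code from NVDA, Narrator, or A11yBoard.

```python
from dataclasses import dataclass


@dataclass
class ArtboardObject:
    """Hypothetical artboard object used only for this illustration."""
    name: str
    x: float  # left edge, in pixels
    y: float  # top edge, in pixels
    z: int    # stacking index; higher values are drawn on top


# A made-up slide: the footer was created first, the title last.
slide = [
    ArtboardObject("Footer text", x=40, y=500, z=0),
    ArtboardObject("Title", x=40, y=20, z=2),
    ArtboardObject("Chart", x=300, y=150, z=1),
]


def z_order(objects):
    """Names in stacking order, ignoring where objects sit on the canvas."""
    return [o.name for o in sorted(objects, key=lambda o: o.z)]


def spatial_order(objects):
    """Names ordered top-to-bottom, then left-to-right."""
    return [o.name for o in sorted(objects, key=lambda o: (o.y, o.x))]


print(z_order(slide))        # ['Footer text', 'Chart', 'Title']
print(spatial_order(slide))  # ['Title', 'Chart', 'Footer text']
```

In this example, a Z-order reading announces the footer before the title, even though the title sits at the top of the slide.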

To address these challenges, Zhuohao (Jerry) Zhang, a CREATE Ph.D. student advised by Jacob O. Wobbrock at the ACE Lab, asked:

- Can digital artboards in presentation software be made accessible for blind and low-vision users to read and edit on their own?

- Can we design interaction techniques to deliver rich 2-D information to screen reader users?

The answer is yes!

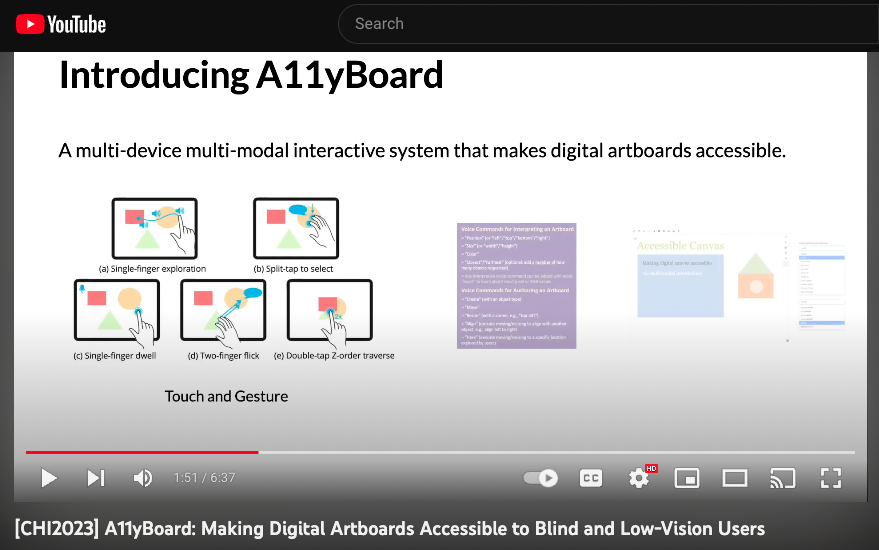

They developed a multidevice, multimodal interaction system – A11yBoard – that mirrors the desktop’s canvas on a mobile touchscreen device and enables rapid finger-driven screen reading via touch, gesture, and speech.

Blind and low-vision users can explore the artboard by using a “reading finger” to move across objects and receive audio tone feedback. They can also use a second finger to “split-tap” on the screen to receive detailed information and select that object for further interactions.
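One way to picture the reading finger is as a hit test: given the finger's position on the mirrored canvas, find the object underneath it so that feedback can be played. The sketch below is an assumption-laden illustration, not A11yBoard's implementation; the `Box` type, the `object_under_finger` helper, and the rule of preferring the topmost object are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Box:
    """Hypothetical axis-aligned object on the mirrored canvas."""
    name: str
    left: float
    top: float
    width: float
    height: float
    z: int  # stacking index; higher values are drawn on top

    def contains(self, x: float, y: float) -> bool:
        """True if the point (x, y) lies inside this box, edges included."""
        return (self.left <= x <= self.left + self.width
                and self.top <= y <= self.top + self.height)


def object_under_finger(objects: List[Box], x: float, y: float) -> Optional[Box]:
    """Return the topmost object under the finger, or None on empty canvas."""
    hits = [o for o in objects if o.contains(x, y)]
    return max(hits, key=lambda o: o.z) if hits else None


canvas = [
    Box("Background rectangle", left=0, top=0, width=800, height=600, z=0),
    Box("Title", left=40, top=20, width=400, height=60, z=1),
]

hit = object_under_finger(canvas, x=100, y=50)
print(hit.name if hit else "empty")  # Title
```

A real system would presumably trigger a tone when the finger enters or leaves an object; here the lookup simply returns the object so the idea stays easy to follow.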

“Walkie-talkie mode,” when turned on by dwelling a finger on the screen like turning on a switch, lets users “talk” to the application.

Users can therefore access many details and properties of objects and their relationships. For example, they can ask for a given number of the closest objects to learn what is nearby to explore. For operations that are not easily performed using touch, gesture, and speech, we also designed an intelligent keyboard search interface that lets blind and low-vision users perform all possible object-related tasks.
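The "closest objects" query mentioned above can be sketched as a nearest-neighbor lookup. This is only an illustration under stated assumptions: the `Shape` type, the use of center-to-center Euclidean distance, and the `closest_objects` helper are hypothetical and may differ from how A11yBoard measures proximity.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Shape:
    """Hypothetical object described only by its name and center point."""
    name: str
    cx: float
    cy: float


def closest_objects(target: Shape, objects: List[Shape], k: int) -> List[Shape]:
    """Return the k objects nearest to target by center-to-center distance."""
    others = [o for o in objects if o is not target]
    others.sort(key=lambda o: math.hypot(o.cx - target.cx, o.cy - target.cy))
    return others[:k]


title = Shape("Title", cx=100, cy=50)
shapes = [
    title,
    Shape("Subtitle", cx=100, cy=120),
    Shape("Image", cx=400, cy=300),
    Shape("Footer", cx=100, cy=500),
]

print([s.name for s in closest_objects(title, shapes, k=2)])
# ['Subtitle', 'Image']
```

Distance between centers is the simplest choice; measuring between the nearest edges would behave differently for large objects.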

Through a series of evaluations with blind users, A11yBoard was shown to provide intuitive spatial reasoning, multimodal access to objects’ properties and relationships, and an eyes-free reading and editing experience for 2-D objects.

Currently, much digital content has been made accessible for blind and low-vision people to read and “digest.” But few technologies have been introduced to make the creation process accessible so that blind and low-vision users can create visual content on their own. With A11yBoard, we have taken a step toward a bigger goal – to make heavily visual content creation accessible to blind and low-vision people.

Paper author Zhuohao (Jerry) Zhang is a second-year Ph.D. student at the UW iSchool. His work in HCI and accessibility focuses on designing assistive technologies for blind and low-vision people. Zhang has published and presented at the CHI, UIST, and ASSETS conferences, receiving a CHI best paper honorable mention award and a UIST best poster honorable mention award and winning a CHI Student Research Competition. His work has also been featured in the Microsoft New Future of Work Report 2022. He is advised by CREATE Co-Director Jacob O. Wobbrock.

- Zhang’s dissertation: A11yBoard: Making Digital Artboards Accessible to Blind and Low-Vision Users

- Complete Introducing A11yBoard video: