December 16, 2023

People with low vision (LV) have had fewer options for physical activity, particularly in competitive sports such as tennis and soccer that involve fast, continuously moving elements such as balls and players. A group of researchers from CREATE associate director Jon E. Froehlich’s Makeability Lab hopes to overcome this challenge by enabling LV individuals to participate in ball-based sports using real-time computer vision (CV) and wearable augmented reality (AR) headsets. Their initial focus has been on tennis.

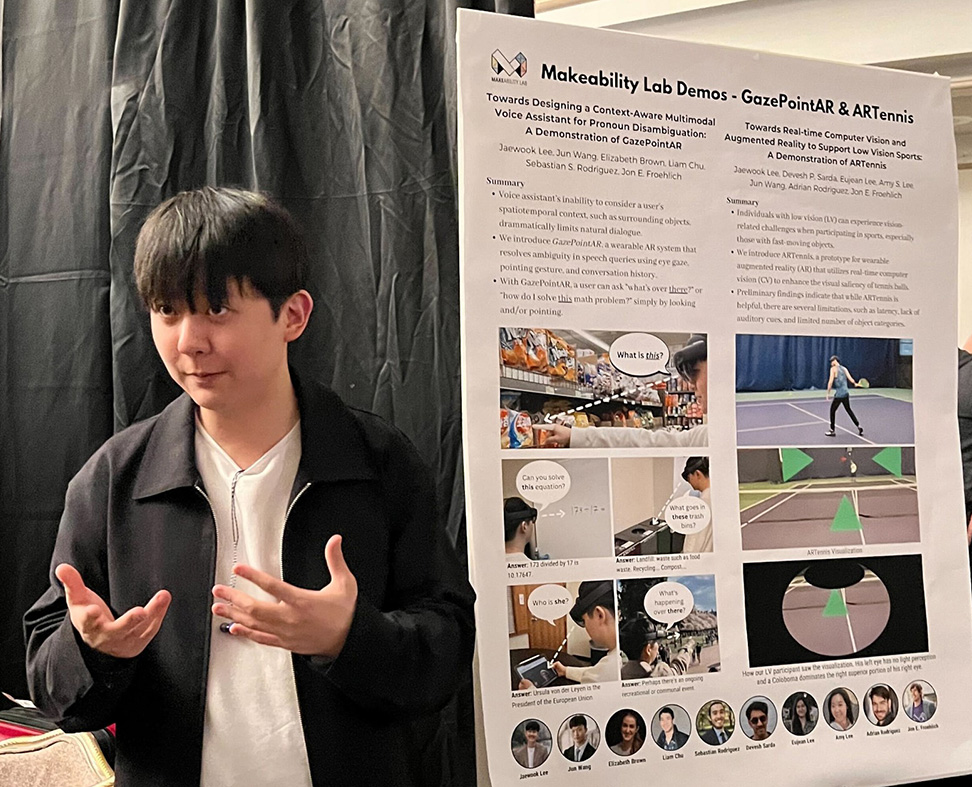

The team includes Jaewook Lee (Ph.D. student, UW CSE), Devesh P. Sarda (MS/Ph.D. student, University of Wisconsin), Eujean Lee (Research Assistant, UW Makeability Lab), Amy Seunghyun Lee (BS student, UC Davis), Jun Wang (BS student, UW CSE), Adrian Rodriguez (Ph.D. student, UW HCDE), and Jon Froehlich.

Their paper, Towards Real-time Computer Vision and Augmented Reality to Support Low Vision Sports: A Demonstration of ARTennis, was published in the 2023 ACM Symposium on User Interface Software and Technology (UIST).

ARTennis is their prototype system capable of tracking and enhancing the visual saliency of tennis balls from a first-person point-of-view (POV). Recent advancements in deep learning have led to models like TrackNet, a neural network that tracks tennis balls in third-person recordings of tennis games and is used to improve sports viewing for LV people. To enhance playability, the team first built a dataset of first-person POV images by having the authors wear an AR headset while playing tennis. They then streamed video from a pair of AR glasses to a back-end server, analyzed the frames using a custom-trained deep learning model, and sent back the results for real-time overlaid visualization.

After a brainstorming session with an LV research team member, the team refined the visualization to enhance the ball’s color contrast and overlay a crosshair in real time.
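The overlay step described above can be illustrated with a small sketch. This is not the team's implementation: the function names `boost_contrast` and `highlight_ball`, the square highlight window, the gain value, and the red crosshair color are all assumptions made for illustration, and the ball position is assumed to come from a separate detection model. The frame is a plain grid of RGB tuples so the example runs without any imaging library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]


def boost_contrast(pixel: Pixel, gain: float = 1.5, pivot: int = 128) -> Pixel:
    """Push each channel away from the pivot value, clamped to 0..255."""
    return tuple(max(0, min(255, round(pivot + (c - pivot) * gain))) for c in pixel)


def highlight_ball(
    frame: Frame,
    center: Tuple[int, int],
    radius: int = 2,
    gain: float = 1.5,
    crosshair_color: Pixel = (255, 0, 0),
) -> Frame:
    """Return a copy of the frame with the ball region emphasized.

    center is the (x, y) ball position, assumed to be supplied by a detector.
    Pixels in a square window around the center get a contrast boost, and a
    crosshair is drawn through the center, leaving a gap over the window so
    the ball itself stays visible.
    """
    cx, cy = center
    height, width = len(frame), len(frame[0])
    out = [list(row) for row in frame]  # copy rows so the input is not modified

    # Boost contrast inside the window around the ball.
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            out[y][x] = boost_contrast(out[y][x], gain)

    # Draw the horizontal and vertical crosshair lines outside the window.
    for x in range(width):
        if abs(x - cx) > radius:
            out[cy][x] = crosshair_color
    for y in range(height):
        if abs(y - cy) > radius:
            out[y][cx] = crosshair_color

    return out
```

In a real-time system, a function like this would run once per frame on the position returned by the detection model, and only the overlay would need to be rendered on the headset display.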

Feedback from early evaluations suggests that the prototype could help LV people enjoy ball-based sports, but there’s plenty of further work to be done. A larger field-of-view (FOV) and audio cues would improve a player’s ability to track the ball. The weight, bulk, and expense of the headset are also factors the team expects to improve with time, as Lee noted in an interview on Oregon Public Broadcasting.

“Wearable AR devices such as the Microsoft HoloLens 2 hold immense potential in non-intrusively improving accessibility of everyday tasks. I view AR glasses as a technology that can enable continuous computer vision, which can empower BLV individuals to participate in day-to-day tasks, from sports to cooking. The Makeability Lab team and I hope to continue exploring this space to improve the accessibility of popular sports, such as tennis and basketball.”

Jaewook Lee, Ph.D. student and lead author