Video games often pose accessibility barriers to gamers with disabilities, but there is no standard method for identifying which games have barriers, what those barriers are, and whether and how they can be overcome. CREATE and Allen School Ph.D. student Jesse Martinez has been working to understand the strategies and resources gamers with disabilities regularly use when trying to identify a game to play, and the challenges they face in this process, with the hope of advising the games industry on how to better support disabled members of its audience.

Martinez, with CREATE associate directors James Fogarty and Jon Froehlich as project advisors and co-authors, published the team’s findings at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2024).

Martinez will present the paper, “Playing on Hard Mode: Accessibility, Difficulty and Joy in Video Game Adoption for Gamers With Disabilities,” virtually at the hybrid conference, and in person at UW DUB’s upcoming para.chi.dub event.

Martinez’s passion for this work came from personal experience: as someone who loves playing all kinds of games, he has spent a lot of time designing new ways to play games to make them accessible for himself and for friends with disabilities. He also has experience working independently as a game and puzzle designer and has consulted on accessibility for tabletop gaming studio Exploding Kittens, giving him a unique perspective on how game designers create games and how disabled gamers hack them.

First, understand the game adoption process

The work focuses on the process of “Game Adoption,” which spans three stages: finding out about a new game (game discovery), learning more about it to see if it’s a good fit for one’s taste and access needs (game evaluation), and getting set up with the game and making any modifications necessary to improve the overall experience (game adaptation). As Martinez notes in the paper, gamers with disabilities already do work to make gaming more accessible, so it’s very important not to overlook this work when designing new solutions.

To explore this topic, Martinez interviewed 13 people with a range of disabilities and very different sets of access needs. In the interviews, they discussed what each person’s unique game adoption process looked like, where they encountered challenges, and how they would want to see things change to better support their process.

Game discovery

In discussing game discovery, the team found that social relationships and online disabled gaming communities were the most valuable resources for learning about new games. Game announcements often don’t come with promises of being accessible. But if a friend suggested a game, it often meant the friend had already considered whether the game had a chance of being accessible. Participants also mentioned that since there is no equivalent to a games store for accessible games, it was sometimes hard to learn about new games. In the paper’s recommendations, Martinez suggests that game distributors like Steam and Xbox work to support this type of casual browsing of accessible games.

Game evaluation

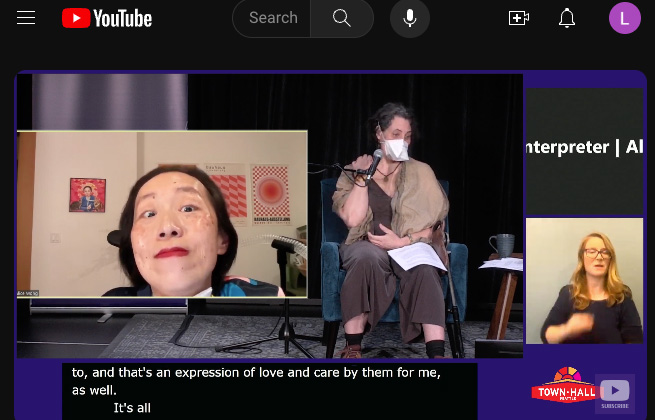

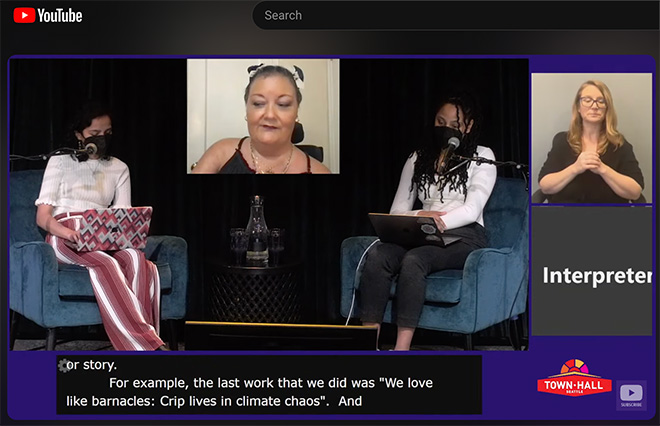

In discussing game evaluation, the team found that community-created game videos on platforms like YouTube and Twitch were useful for making accessibility judgments. Interestingly, the videos didn’t need to be accessibility-focused, since just seeing how the game worked was useful information. One participant in the study highlights the accessibility options menus in their own Twitch streams and asks other streamers to do likewise, since this information can be tricky to find online.

Game adaptation

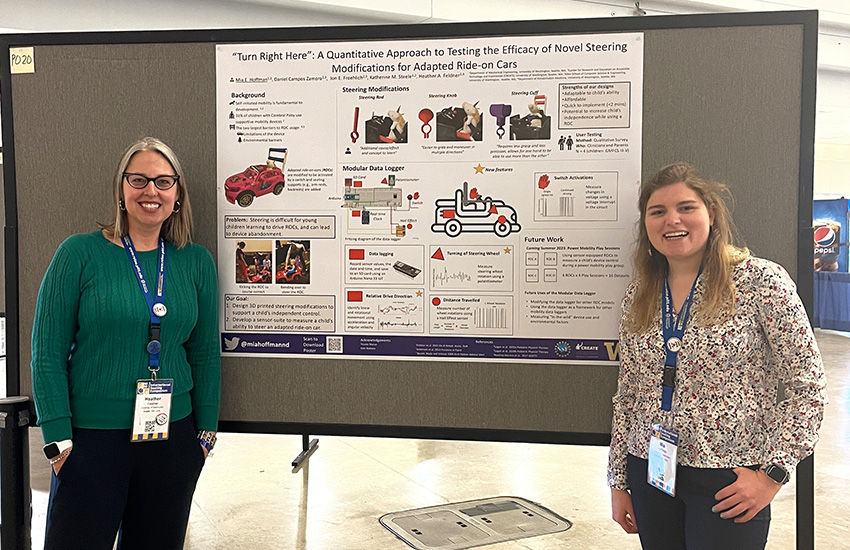

Martinez and the team discovered many different approaches people took to make a game accessible to them, starting with enabling accessibility features like captions or getting the game to work with their screen reader. Some participants designed their own special tools to make the system work, such as a 3D-printed wrist mount for a gaming mouse. Participants shared that difficulty levels in a game are very important accessibility resources, especially when inaccessibility in the game had already made things harder.

The important thing is that players be allowed to choose what challenges they want to face, rather than being forced to play on “hard mode” if they don’t want to.

Other participants discussed how they change their own playstyle to make the game accessible, such as playing as a character who fights with a ranged weapon or who can teleport across parts of the game world. Others went even further, creating their own new objectives in the game that better suited what they wanted from their experience. This included ignoring the competitive part of the racing game Mario Kart to just casually enjoy driving around its intricate worlds, and participating in a friendly roleplaying community in GTA V where they didn’t have to worry about the game’s fast-paced missions and inaccessible challenges.

Overcoming inaccessible games

Martinez uses all this context to introduce two concepts to the world of human-computer interaction and accessibility research: “access difficulty” and “disabled gaming.”

“Access difficulty” is how the authors describe the challenges created in a game specifically due to inaccessibility, which are different from the challenges a game designer intentionally creates to make the game harder. The authors emphasize that the important thing is that players be allowed to choose what challenges they actually want to face, rather than being forced to play on “hard mode” compared to nondisabled players.

“Disabled gaming” acknowledges the particular way gamers with disabilities play games, which is often very different from how nondisabled people play games. Disabled gaming is about taking the game you’re presented and turning it into something fun however you can, regardless of whether that’s what the game designer expects or wants you to do.

Martinez and his co-authors are very excited to share this work with the CREATE community and the world, and they encourage anyone interested in participating in a future study of disabled gaming to join the #study-recruitment channel on CREATE’s Slack. If you’re not on CREATE’s Slack, request to join.